Navigating the Storm - Effective Incident Response in Shared Kubernetes Clusters

Kubernetes serves as the backbone of modern cloud-native applications, providing scalability, flexibility, and automation for deploying and managing workloads. In many organizations, a single Kubernetes cluster is shared across multiple teams to maximise resource efficiency, reduce operational overhead, and simplify infrastructure management. However, when an incident occurs in such an environment, security teams must navigate a minefield of challenges: isolating affected workloads without disrupting business-critical applications, identifying the root cause in a highly dynamic infrastructure, and ensuring that attackers cannot exploit inter-team trust boundaries. This article provides a structured approach to handling incidents in shared Kubernetes clusters, focusing on the isolation and containment phase of incident response and on which practices are recommended and which are best avoided.

Standalone vs Shared Cluster Environment

Kubernetes clusters can be deployed in various configurations, but two common approaches are standalone clusters and shared clusters. The choice between these architectures significantly impacts security, resource management, and incident response strategies.

In a standalone cluster setup, each team, application, or business unit operates its own dedicated cluster. This approach provides strong isolation, ensuring that security incidents remain contained within a single environment. From an incident response perspective, this means that after a security breach the affected components (containers, nodes, or even the cluster itself) can be decommissioned and replaced, provided all forensic evidence has been collected and exported first. This minimises downtime and leaves other teams’ operations and availability unaffected.

A shared cluster setup, on the other hand, allows multiple teams or applications to run within a single Kubernetes cluster. This architecture is cost-effective and simplifies operations by centralising management, but it introduces security challenges, such as ensuring proper namespace isolation, enforcing strict role-based access control (RBAC), and preventing lateral movement in case of a breach. When an incident occurs in a shared cluster, the luxury of tearing down the cluster or its components to ensure the threat has been eradicated is not readily available, because multiple teams, critical workloads, and interconnected services rely on the same infrastructure. Instead, incident response must focus on effective containment and targeted isolation to minimise impact while maintaining cluster availability.

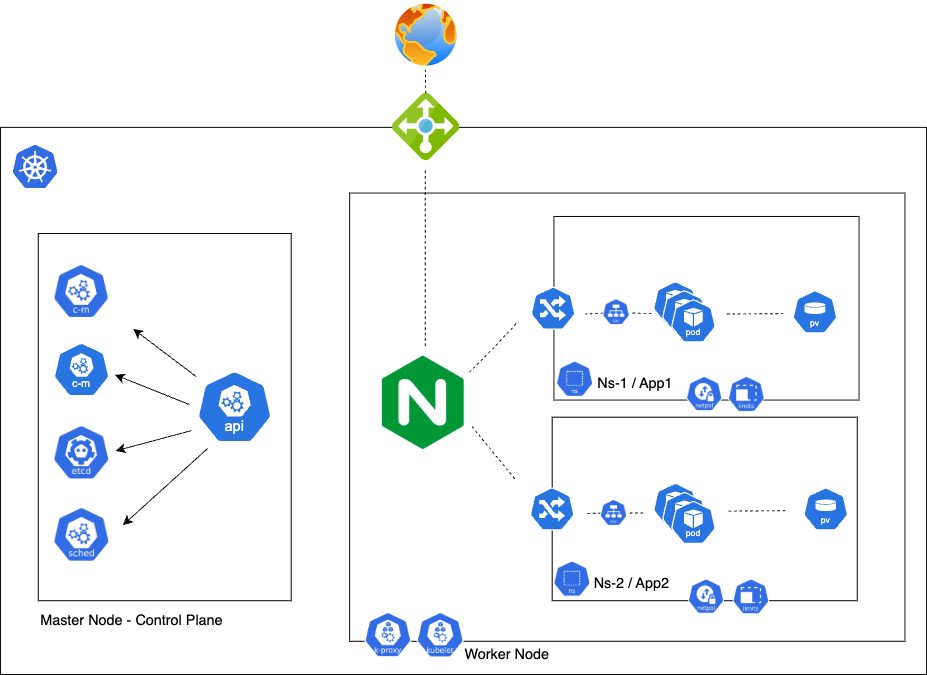

The diagram above illustrates a shared cluster, highlighting how multiple teams, workloads, and namespaces coexist within a single Kubernetes environment.

Assumptions

For the purposes of this article, we will assume the following scenario:

- A publicly exposed web application has been compromised, providing an attacker with an initial foothold inside a container running within our shared cluster.

- The cyber defense team has detected an active reverse shell connection.

- The shared cluster operates in a "namespace-as-a-service" manner: each application team receives a namespace in which it runs its application workloads.

- Cross-namespace communication between different application namespaces is prohibited.

The following sections focus on immediate containment and isolation strategies that enable successful forensic collection while preserving the availability and stability of the cluster.

Recommended Practices

Network Connectivity as an Immediate Containment and Isolation Strategy

Why?

By cutting off network connectivity, we can prevent the compromised pod from communicating with other services, exfiltrating data, or spreading laterally within the shared cluster.

How?

The diagram above details a "namespace-as-a-service" setup in which each pod sits behind a Service that load balances traffic from the ingress controller (Nginx in this case) to the pod’s containers.

Kubernetes Services (of type ClusterIP, NodePort, or LoadBalancer) use label selectors to decide which pods receive traffic. If a pod no longer carries the labels that a Service selects on, it is removed from that Service's endpoints and stops receiving traffic through it. Therefore, to cut ingress, we remove the selector labels from the compromised pod. If the pod belongs to a Deployment that selects on the same labels, the ReplicaSet no longer counts the pod and starts a fresh replica, while the unlabeled pod keeps running for investigation. The command below achieves this, provided the Service selects on the "app" label (for example "app=web"):

kubectl label pod <pod-name> app-
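
A Service can select on more than one label, and the key is not always "app". The sketch below shows one way to strip every selector key in a single call. It assumes the selector has been copied from the SELECTOR column of "kubectl get service -o wide"; the function name unlabel_from_selector and the sample values in the comments are hypothetical.

```shell
# unlabel_from_selector NAMESPACE POD SELECTOR
# SELECTOR is a comma-separated list of key=value pairs, in the form shown by
# the SELECTOR column of `kubectl get service -o wide`
# (for example "app=web,tier=frontend").
unlabel_from_selector() {
  if [ -z "$3" ]; then
    echo "usage: unlabel_from_selector NAMESPACE POD SELECTOR" >&2
    return 1
  fi
  # Turn "app=web,tier=frontend" into "app- tier- ", which is kubectl's
  # syntax for removing labels.
  removals=$(printf '%s\n' "$3" | tr ',' '\n' | sed -e 's/=.*$//' -e 's/$/-/' | tr '\n' ' ')
  # $removals is left unquoted on purpose: each label key becomes one argument.
  kubectl label pod "$2" -n "$1" $removals
}
```

Removing any single key of a multi-label selector is already enough to stop the Service from matching the pod; removing all of them also detaches the pod from a ReplicaSet that selects on the same labels.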

With ingress through the Service cut off, egress should be restricted as well. Depending on the setup, it is recommended to apply a Kubernetes NetworkPolicy that denies all egress and ingress traffic for the compromised pod, except for security logging. Note that NetworkPolicies are only enforced if the cluster's network plugin supports them.

The compromised pod should first be labeled, since NetworkPolicies select pods by label:

kubectl label pod <pod-name> quarantined=true

Then, apply a network policy that restricts egress traffic. A sample policy is listed below; it will look different if the cluster uses a service mesh, in which case the policy configuration depends on the vendor.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: block-egress-for-compromised-pod
  namespace: default # Replace with the namespace of the compromised pod
spec:
  podSelector:
    matchLabels:
      quarantined: "true" # Target only the compromised pod; label values are strings, so quote it
  policyTypes:
    - Egress
  egress: [] # No outbound connections allowed at all
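
The sample policy covers egress only. As a minimal sketch of the "deny everything except security logging" variant mentioned earlier, the helper below renders a policy that blocks all ingress and allows egress only to one namespace. It assumes the pod ships its logs to a collector in a namespace that you pass in as the second argument; the policy name quarantine-deny-all, the function name, and the example namespace "logging" are hypothetical. The kubernetes.io/metadata.name label is set automatically on namespaces in current Kubernetes versions.

```shell
# render_quarantine_policy NAMESPACE LOG_NAMESPACE
# Prints a NetworkPolicy manifest; nothing is applied until the output is
# piped into kubectl.
render_quarantine_policy() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: quarantine-deny-all
  namespace: $1
spec:
  podSelector:
    matchLabels:
      quarantined: "true"
  policyTypes:
    - Ingress   # no ingress rules are listed, so all inbound traffic is denied
    - Egress
  egress:
    - to:       # the only allowed destination: pods in the logging namespace
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: $2
EOF
}

# Example (review the output before applying it):
#   render_quarantine_policy team-a logging | kubectl apply -f -
```

If logs are collected at node level rather than pushed by the pod, the egress rule can be dropped entirely, which leaves the pod with no allowed traffic in either direction.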

Why is this useful in incident response?

- Quickly removes network connectivity of the compromised Pod without deleting it.

- Does not affect other healthy Pods in a deployment.

- Allows forensic investigation while keeping the Pod running in isolation.

Role-Based Access Control (RBAC) Lockdown

Why?

When responding to a compromised pod in a shared Kubernetes cluster, one of the highest-priority actions, in addition to limiting network access, is limiting its ability to perform privileged actions. In many security incidents, attackers exploit excessive RBAC permissions to communicate with the control plane API and thus move laterally, escalate privileges, or execute malicious workloads.

How?

In Kubernetes, each pod runs under a ServiceAccount, which grants it specific API permissions to interact with the control plane API. Attackers often abuse over-permissioned ServiceAccounts to interact with the cluster.

To identify which ServiceAccount a pod is using:

kubectl get pod <pod-name> -o jsonpath='{.spec.serviceAccountName}'
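
Once the ServiceAccount is known, it helps to see what it is actually allowed to do before revoking anything. The sketch below chains the lookup above with "kubectl auth can-i --list" under impersonation. It assumes the responder's own account is permitted to impersonate ServiceAccounts; the function name sa_permissions is hypothetical.

```shell
# sa_permissions NAMESPACE POD
# Looks up the pod's ServiceAccount and lists the permissions that account
# holds in the pod's namespace.
sa_permissions() {
  sa=$(kubectl get pod "$2" -n "$1" -o jsonpath='{.spec.serviceAccountName}') || return 1
  # ServiceAccounts authenticate as system:serviceaccount:<namespace>:<name>.
  kubectl auth can-i --list -n "$1" --as="system:serviceaccount:$1:$sa"
}

# Example:
#   sa_permissions team-a web-123
```

The listing reflects permissions as evaluated in that one namespace, so it is a starting point for the investigation and not a full audit of the account.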

If you are unsure whether fully disabling the ServiceAccount would break other workloads, or if the ServiceAccount is used by multiple applications, you can revoke access gradually by deleting the RoleBindings and ClusterRoleBindings that grant it permissions. Keep in mind that a binding can list several subjects, so deleting it revokes access for all of them:

kubectl delete rolebinding <rolebinding-name> -n <namespace>

kubectl delete clusterrolebinding <clusterrolebinding-name>
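
Before deleting a binding you need to know which bindings mention the ServiceAccount at all. The following is a coarse sketch of that search: it matches on the subject name only and ignores the subject's kind and namespace, so every hit should be confirmed with "kubectl describe rolebinding" before acting on it. The function name rolebindings_for_sa is hypothetical.

```shell
# rolebindings_for_sa SERVICEACCOUNT
# Prints "<namespace><TAB><rolebinding>" for every RoleBinding in the cluster
# that lists a subject with the given name.
rolebindings_for_sa() {
  kubectl get rolebindings -A \
    -o jsonpath='{range .items[*]}{.metadata.namespace}{"\t"}{.metadata.name}{"\t"}{.subjects[*].name}{"\n"}{end}' \
  | awk -F '\t' -v sa="$1" '{
      # The third column holds the subject names separated by spaces.
      n = split($3, names, " ")
      for (i = 1; i <= n; i++) {
        if (names[i] == sa) { print $1 "\t" $2; break }
      }
    }'
}

# Example:
#   rolebindings_for_sa web-sa
```

The same pattern works for ClusterRoleBindings if the resource name is changed and the namespace column is dropped.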

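If logs show suspicious API calls coming from the ServiceAccount, or if you are dealing with ServiceAccount token theft and need immediate containment, you can try disabling the ServiceAccount by deleting its token Secret. This applies to clusters that still use Secret-based ServiceAccount tokens; since Kubernetes 1.24 such Secrets are no longer created automatically, so the command below may find nothing to delete: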

kubectl delete secret $(kubectl get serviceaccount <serviceaccount-name> -n <namespace> -o jsonpath='{.secrets[0].name}') -n <namespace>

If you are certain that no other workloads use the ServiceAccount or if it was created by an attacker, you should remove it:

kubectl delete serviceaccount <serviceaccount-name> -n <namespace>

Why is this useful in Incident Response?

Without proper isolation, an attacker could use excessive permissions in one namespace to impact workloads in other namespaces or even compromise the entire cluster.

By acting quickly to restrict privileges, disable credentials, and remove unnecessary RBAC permissions, security teams can effectively contain threats while maintaining availability for unaffected workloads.

Node-Level Taints, Tolerations & Eviction

Now that the compromised pod has limited network and RBAC capabilities, let me introduce node taints, tolerations, and evictions, and how they can be leveraged in immediate incident response scenarios.

Why?

Tainting a node helps both isolate and manage workloads in the cluster. A taint marks the node as unsuitable for scheduling pods unless those pods tolerate the taint, which lets you control which workloads can run on that node. For example, a compromised pod can be labeled as compromised and given a toleration for the quarantine taint, so that it stays on the compromised node until the forensic evidence has been collected and the root cause of the attack determined. This raises an important question: what do we do with the other workloads on the node that have not triggered any alerts so far and thus may not be compromised? Do we evict them (force them to be rescheduled on a different node) or not? The concern is that force-rescheduling pods onto other nodes risks spreading the attack to those nodes and to the workloads running on them.

We know that an active reverse shell is running in the compromised container, that cross-namespace communication is not allowed, and that the namespace serves a publicly facing workload. We will therefore assume that the attack originated from a vulnerable web application that allowed remote code execution in the container. However, depending on the circumstances and the cluster setup, keep in mind that evicting a pod forces it to be rescheduled on a different node. If one of the evicted pods is the source of the attacker's foothold in the cluster, the attack could spread to other nodes running different applications' workloads. This is why it is important to know the cause and origin of the attack before evicting the pods that were running on the same node as the compromised pod at the time of the attack.

How?

Identify the node the pod is running on:

kubectl get pod <pod-name> -o wide

Apply a taint to the node to prevent new workloads from being scheduled on it:

kubectl taint nodes <node-name> quarantine=true:NoSchedule

To evict the pods, you can run the following command:

kubectl taint nodes <node-name> quarantine=true:NoExecute

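This command evicts every pod on the node that does not tolerate the taint. Pods managed by a controller such as a Deployment are recreated on other nodes. Note that the compromised pod is evicted as well unless it tolerates the taint, so make sure it carries a matching toleration, or that its evidence has already been collected, before applying NoExecute.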
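
Two small helpers can make the eviction step safer. The first lists everything running on the node so you can review it before evicting; the second adds a toleration for the quarantine=true:NoExecute taint to a running pod so that the pod survives the eviction. This is a sketch under two assumptions: Kubernetes permits adding tolerations to an existing pod, and the pod already has a tolerations list to append to (most clusters add default tolerations to every pod). The function names are hypothetical.

```shell
# other_pods_on_node NODE
# Lists every pod scheduled on the node, across all namespaces.
other_pods_on_node() {
  kubectl get pods -A -o wide --field-selector "spec.nodeName=$1"
}

# keep_pod_on_tainted_node NAMESPACE POD
# Appends a toleration matching the quarantine=true:NoExecute taint.
keep_pod_on_tainted_node() {
  # JSON Patch "add" with the "-" index appends to the existing list.
  patch='[{"op":"add","path":"/spec/tolerations/-","value":{"key":"quarantine","operator":"Equal","value":"true","effect":"NoExecute"}}]'
  kubectl patch pod "$2" -n "$1" --type=json -p "$patch"
}

# Example order of operations:
#   other_pods_on_node <node-name>
#   keep_pod_on_tainted_node <namespace> <pod-name>
#   kubectl taint nodes <node-name> quarantine=true:NoExecute
```

Adding the toleration first and the NoExecute taint second keeps the compromised pod in place while the remaining pods are moved off the node.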

Why is this useful in Incident Response?

- Marks compromised nodes to prevent non-essential pods from being scheduled on them.

- Allows critical pods to continue running on affected nodes by using tolerations.

- Contains the spread of an attack by isolating affected workloads.

- Minimises downtime by rescheduling unaffected pods to other nodes.

Checking for Container Escapes

Why is it important to check for container escapes if we know that one of the pods has been compromised? Container escapes significantly broaden the scope of the attack, extending beyond the originally compromised container to the host system and potentially other containers within the cluster. This is why detecting and mitigating container escapes is crucial in preventing a compromised web app from leading to a broader compromise in a shared Kubernetes cluster.

Checking for container escapes during incident response (IR) in a shared Kubernetes cluster involves monitoring various indicators of compromise (IoC) and using tools to detect any suspicious activity that may indicate an attacker has attempted or successfully escaped from a container to the underlying host. Here's how you can approach this:

- Check for the applied constraints and policies using a policy enforcement tool like Gatekeeper to understand the platform's security posture and assess how difficult it would be for an attacker to escalate privileges or escape to the host.

- Inspect Running Processes on the Node - Look for unauthorized processes or malicious activity that may indicate an attacker has broken out of the container and is running code on the host system.

- Verify File Integrity on the Node - Unauthorized changes to critical system files can indicate that an attacker has gained access to the host system; after an escape they may have modified binaries or installed backdoors. Two complementary checks are file integrity checks and package integrity verification.

- Look for Suspicious Files - Uncover unauthorized or malicious files that an attacker may have placed after gaining access to the host system. Monitor directories like /tmp and /home for unexpected binaries or scripts.

- Look for Unexpected Host File Access - Review /var/log/audit/audit.log, or set watch rules with auditctl, to detect whether a container accessed sensitive files such as /etc/shadow or /root/.ssh/. Such access points to a potential container escape and unauthorized access to the host system.

- Monitor Kernel and System Modules - Unauthorized or malicious modifications, such as the loading of custom kernel modules, can signal a container escape or compromise of the host system. These changes can allow an attacker to gain higher privileges, maintain persistence, or execute arbitrary code outside of the container, potentially affecting the entire Kubernetes cluster.

- Ensure There Are No Unexpected Users or SSH Keys - Unauthorized user accounts or SSH keys on the node could indicate an attacker’s attempt to maintain persistent access, escalate privileges, or move laterally within the cluster.

- Network Anomalies - Unexpected network connections from a compromised container can indicate malicious activity such as reverse shells or unauthorized data exfiltration. Detecting unusual listening ports or suspicious traffic patterns helps identify and mitigate threats in the cluster quickly.
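
As one concrete instance of the "suspicious files" check above, the sketch below lists executable files in a directory that were modified within the last few minutes. It is meant to be run on the node itself and assumes a find implementation that supports -mmin (GNU and BSD find both do). The function name recent_executables is hypothetical, and the time window is something you would pick based on the incident timeline.

```shell
# recent_executables DIR MINUTES
# Prints regular files under DIR that have the owner-execute bit set and
# were modified less than MINUTES minutes ago.
recent_executables() {
  # -xdev keeps the search on one filesystem; -perm -100 means "owner can execute".
  find "$1" -xdev -type f -perm -100 -mmin "-$2" 2>/dev/null
}

# Example: executables dropped into /tmp during the last two hours
#   recent_executables /tmp 120
```

The other checks in the list map onto standard host tools: lsmod for loaded kernel modules, rpm -Va or dpkg --verify for package integrity, and ausearch -f <path> for audit records that touch a given file.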

Techniques and Practices to Avoid

In certain incident response scenarios, there are techniques and practices that may inadvertently worsen the situation, such as:

- Blindly deleting compromised pods before understanding the attack. Deleting a compromised pod without full analysis can erase forensic evidence, making it harder to understand the attacker's actions and entry points. Instead, isolate the pod and collect data before removal.

- Ignoring resource-based isolation. Relying solely on network isolation (e.g., blocking egress) while leaving the pod with access to shared resources (e.g., persistent storage, service accounts) can allow attackers to continue operations. Use multiple layers of isolation, including taints, tolerations, and resource constraints.

- Evicting or rescheduling workloads prematurely. Moving workloads before fully understanding the scope of an attack can spread compromise to healthy nodes. Before eviction, verify that pods are not running malicious processes or misusing credentials.

- Assuming compromised credentials haven’t been used elsewhere. If a pod or node is compromised, assume its credentials (service accounts, API tokens, secrets) have been exposed. Rotate credentials and audit their usage across the cluster.
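
To illustrate the first point, a minimal snapshot of a pod's state can be taken with a handful of kubectl calls before anything is removed. This is only a sketch of the cheapest evidence to grab and does not replace proper forensic collection; the function name snapshot_pod and the output file names are hypothetical.

```shell
# snapshot_pod NAMESPACE POD OUTDIR
# Saves the pod manifest, description, logs, and related events into OUTDIR.
snapshot_pod() {
  mkdir -p "$3" || return 1
  kubectl get pod "$2" -n "$1" -o yaml > "$3/pod.yaml"
  kubectl describe pod "$2" -n "$1" > "$3/describe.txt"
  kubectl logs "$2" -n "$1" --all-containers --timestamps > "$3/logs.txt"
  kubectl get events -n "$1" --field-selector "involvedObject.name=$2" > "$3/events.txt"
}

# Example:
#   snapshot_pod team-a web-123 ./evidence/web-123
```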

Using Security Tooling to Automate Detection & Response

In a shared cluster, automated detection and containment should be integrated, but with controlled execution to prevent unnecessary disruption to other teams. Runtime security scanning involves continuous monitoring of system behavior, enforcing security policies to detect and prevent threats such as container escapes, privilege escalation, and unauthorised file modifications.

Key capabilities of runtime security scanning include:

System Call Monitoring & Behavioral Detection:

- Monitors system calls (syscalls) in real-time to detect unusual activity.

- Identifies suspicious process executions, privilege escalation attempts, and file modifications.

- Helps detect container escapes by monitoring unexpected interactions with the host.

File Integrity & Process Monitoring:

- Tracks modifications to critical files inside running containers.

- Detects execution of unauthorised binaries or scripts.

- Identifies unexpected process tree behaviors (e.g., reverse shells, crypto miners).

Network-Level Anomaly Detection:

- Monitors container network traffic for unusual outbound connections.

- Detects unauthorised lateral movement within the cluster.

- Blocks outbound connections to unapproved external destinations.

While automating response significantly improves security, false positives can lead to unnecessary disruptions, especially in a shared Kubernetes cluster where multiple teams rely on the same infrastructure. Overly aggressive containment can mistakenly isolate legitimate workloads, revoke necessary permissions, or block critical network traffic.

To balance security and availability, incident response automation must include human-in-the-loop mechanisms.
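
One simple form of a human-in-the-loop mechanism is an approval gate: the automation proposes a containment command, and nothing runs until a responder confirms it. The sketch below shows the idea for an interactive shell. The function name confirm_and_run is hypothetical, and a real pipeline would more likely route the approval through a chat or ticketing workflow.

```shell
# confirm_and_run COMMAND [ARGS...]
# Shows the proposed command and runs it only if the operator types "yes".
confirm_and_run() {
  printf 'Proposed action: %s\n' "$*" >&2
  printf 'Type "yes" to execute: ' >&2
  read -r answer
  if [ "$answer" = "yes" ]; then
    "$@"
  else
    echo "Skipped: $*" >&2
    return 1
  fi
}

# Example:
#   confirm_and_run kubectl label pod <pod-name> quarantined=true
```

Prompts go to standard error so that the output of the approved command stays clean for logging or further processing.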

Conclusion

In conclusion, responding to incidents in shared Kubernetes clusters requires a strategic, multi-layered approach that balances the need for security with the operational demands of a highly dynamic environment. Containment techniques such as network isolation, RBAC lockdowns, and node-level taints are essential in reducing the attack surface and preventing further compromise, all while ensuring the continued operation of unaffected workloads. Throughout this process, it is crucial to collect forensic evidence without disrupting the environment, as this will inform a more effective and comprehensive response.

Equally important is understanding the full scope of the incident as soon as possible. A clear understanding of how the breach occurred and what the attacker’s objectives were will enable security teams to act swiftly and accurately, making informed decisions that limit the potential damage and avoid unnecessary disruptions to the rest of the cluster.

In the second part of this article series, I will delve into specific steps for preserving evidence during an incident response. These steps are critical for ensuring that valuable information is not lost and that you have the necessary data to conduct a thorough investigation and ultimately recover from the incident. Stay tuned!